When we started building our own web applications we knew there would come a day when we would need to start getting serious about hosting our tools on the internet. In the early days it didn't matter. We could run everything on a single server and if there was an issue we didn't have enough customers who would notice. Everything has changed now.

Codebase, our code hosting application, has thousands of users using it every day as a central part of their workflow and if we have any issues it affects them in a very real way - they cannot do their work. As such, we strive to be online 24/7/365 - 100% of the time - and to hit this target we have to think very hard about our network infrastructure.

In this article I'm going to share with you some of our secrets.

We own 100% of our hardware. We don't use "the cloud". If we can't touch it, we don't use it as part of our infrastructure. We believe this approach has not only saved us a great deal of money but has also given us the edge when it comes to reliability. Hardly a day goes by when we don't hear about yet another failure in the cloud or a web service being taken offline because of issues with its hosting provider.

We use Dell-branded hardware throughout our setup. We have found the Dell hardware to be exceptionally reliable and cost-effective for us. When we have hardware issues, we can get replacements almost immediately (although we do stock on-site spares for all common components - hard drives, memory, power supplies etc.).

We run our own network. In May 2013, we commissioned our own autonomous network which allows us to connect to multiple upstream connectivity providers and send & receive traffic over a number of diverse paths. This means if one upstream has issues or is performing some maintenance, we can take it offline and be completely unaffected. It's such a breath of fresh air for us and has put us firmly in the driving seat going forward.

Hardware fails, so we run all our components in pairs. Each database server is actually two servers, each load balancer is two servers and each switch is two switches. Take our database servers as an example: we run two physical servers which constantly replicate data between them. Should one of them fail, the other is standing by to immediately take over its responsibilities until our team can investigate fully.

The same applies to network hardware. We employ two core routers which work as a pair and are both capable of routing all traffic to our entire network in the event one should fail. Switches are no different: all servers are connected to at least two gigabit switches to protect against switch or network card failures.
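The pairing idea can be sketched in a few lines of Python. This is only an illustration of the principle, not our actual failover tooling: the `Node` and `RedundantPair` names, the `healthy` flag and the `db-a`/`db-b` hostnames are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True


class RedundantPair:
    """Two nodes doing one job; either can carry the full load alone."""

    def __init__(self, primary: Node, standby: Node) -> None:
        self.active = primary
        self.standby = standby

    def check(self) -> Node:
        """Promote the standby if the active node has failed, then return the active node."""
        if not self.active.healthy:
            if not self.standby.healthy:
                raise RuntimeError("both nodes in the pair are down")
            # Swap roles: the old standby now serves traffic.
            self.active, self.standby = self.standby, self.active
        return self.active


pair = RedundantPair(Node("db-a"), Node("db-b"))  # hypothetical hostnames
pair.active.healthy = False   # simulate a hardware failure on db-a
current = pair.check()        # db-b is promoted and returned
```

The failed node stays in the pair as the standby, so once it has been investigated and repaired it is ready to take over again.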

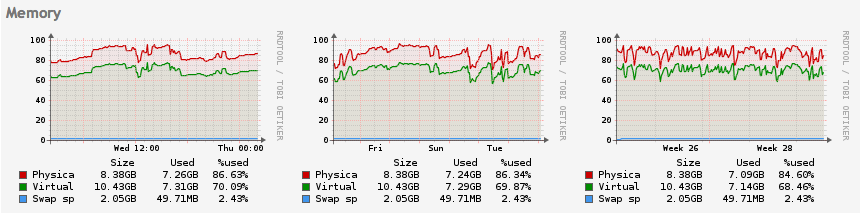

Monitoring is key. It's all well and good having the best hardware and the best network but shit happens and when you have lots of servers running lots of applications, it happens quite frequently. If you're not monitoring your systems, anything could be happening and you won't know until something goes bang. We monitor every detail of each of our servers and if anything starts to look worrisome we're alerted and can investigate before it becomes service-affecting.

This monitoring happens 24/7/365 and, outside of office hours, our technical team will be alerted by SMS day or night.
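As a rough sketch of what "alert before it becomes service-affecting" can look like: warn at a threshold below the point of failure, and choose the alert channel by time of day. Everything specific here is an assumption for illustration, including the metric names, the threshold values, the office hours and the in-hours `dashboard` channel. Only the out-of-hours SMS comes from the text above.

```python
from datetime import datetime, time

# Hypothetical warning thresholds, as a percentage of capacity.
WARN_THRESHOLDS = {"disk_used": 80.0, "memory_used": 90.0, "cpu_load": 85.0}

# Assumed office hours, Monday to Friday.
OFFICE_START, OFFICE_END = time(9, 0), time(17, 30)


def worrying_metrics(metrics: dict) -> list:
    """Return the names of metrics that have reached their warning threshold."""
    return sorted(
        name
        for name, value in metrics.items()
        if name in WARN_THRESHOLDS and value >= WARN_THRESHOLDS[name]
    )


def alert_channel(now: datetime) -> str:
    """Pick how to reach the team: dashboard in office hours, SMS otherwise."""
    in_office = now.weekday() < 5 and OFFICE_START <= now.time() < OFFICE_END
    return "dashboard" if in_office else "sms"


sample = {"disk_used": 83.5, "memory_used": 41.0, "cpu_load": 12.0}
flagged = worrying_metrics(sample)                     # only disk_used is over its threshold
channel = alert_channel(datetime(2013, 6, 1, 3, 15))   # 03:15, outside office hours
```

Setting the warning level well below 100% is the point: a disk at 83% is a chore for tomorrow morning, whereas a full disk is an outage.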

We have backup hardware & networks in place. We host our core infrastructure in a fantastic, reliable data centre which provides dual power feeds to our rack, powered by different sets of infrastructure, as well as redundant cooling. However, we also have hardware in a second site, with data being constantly replicated to it, which can be brought online should anything serious happen to our core location. This means that if something terrible should happen (touches wood), we can bring things back online in a sensible timeframe.

We keep in control of each and every server. In our early days, we had some fantastic scripts & tools which automated all our server configuration. However, we have found that some of this automation was actually stopping us from doing certain things and from debugging service-affecting issues in a timely manner. These days we have a far less automated system, although we have added some server management features to our intranet, aTech Fusion. These features provide us with centralised management of access rights and files & folders, as well as centralised firewall rules which can be pushed out automatically.
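To give a flavour of centralised firewall rules, here is a minimal sketch in which rules are defined once and rendered for each server according to its role. It is not how aTech Fusion is implemented: the `Rule` structure, the roles, the hostnames and the address ranges are invented for the example, and the iptables-style output format is an assumption about the firewall in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    port: int
    source: str        # CIDR range allowed to connect
    roles: frozenset   # server roles the rule applies to


# Hypothetical central rule set and server inventory.
RULES = [
    Rule(22, "10.0.0.0/8", frozenset({"web", "db"})),
    Rule(443, "0.0.0.0/0", frozenset({"web"})),
    Rule(3306, "10.0.1.0/24", frozenset({"db"})),
]
SERVERS = {"web-01": "web", "db-01": "db"}


def rules_for(server: str) -> list:
    """Render the iptables-style lines that apply to one server's role."""
    role = SERVERS[server]
    return [
        f"-A INPUT -p tcp -s {r.source} --dport {r.port} -j ACCEPT"
        for r in RULES
        if role in r.roles
    ]


web_rules = rules_for("web-01")  # SSH from the internal range, HTTPS from anywhere
```

Keeping the rules in one place means a change is made once and every affected server picks it up on the next push, while each server only ever receives the rules relevant to its role.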